In our review of the Radeon VII, Joseph Bradford liked the card but also stated:

“The question arises when you look at the whole picture of what the competition has to offer. The 1080 [T]i performs many of the same gaming tasks despite having released two years ago. The RTX 2080 is a comparable card in terms of relative performance and price, and has the added benefit of hardware DLSS and hardware ray tracing.”

This merely hints at my larger concern and frustration with AMD. I'm not going to suggest for one moment that the Radeon VII (henceforth, R7) is a bad card. It isn't. My frustration lies not with the card but with AMD.

I haven't seen many other outlets discuss this, so I think it bears discussion here. Personally, I don't care whose name is on the label. I want the best hardware out there. So for me, it has been incredibly frustrating over the last few years to see an utter lack of competition in the enthusiast space.

Two years ago, AMD had no response to the 1080 Ti. Today, it has no response to the 2080 Ti. I fundamentally believe that one company holding the enthusiast crown for so long is simply unhealthy, especially for consumers, who pay the literal price.

With this in mind, this discussion will focus solely on the gaming applications of the R7 and why I believe there's a looming flaw here for Team Red. The general performance roundups have more or less concluded that the R7 performs broadly on par with the 2080, the card AMD itself has positioned as the R7's direct competitor.

But let's look deeper, because a fundamental point is being overlooked. AMD itself is comparing the R7 directly to the 2080. By extension, it is also comparing the R7 to the 1080 Ti. We know this because benchmarks show that the 2080 and 1080 Ti effectively trade blows in traditional gaming applications.

Looking closer, the 2080 is built on a 12nm node. The 1080 Ti, a card from two years ago, is built on a 16nm node. On the power front, the 2080's TDP is 225W for the Founders Edition. The 1080 Ti's TDP is 250W.

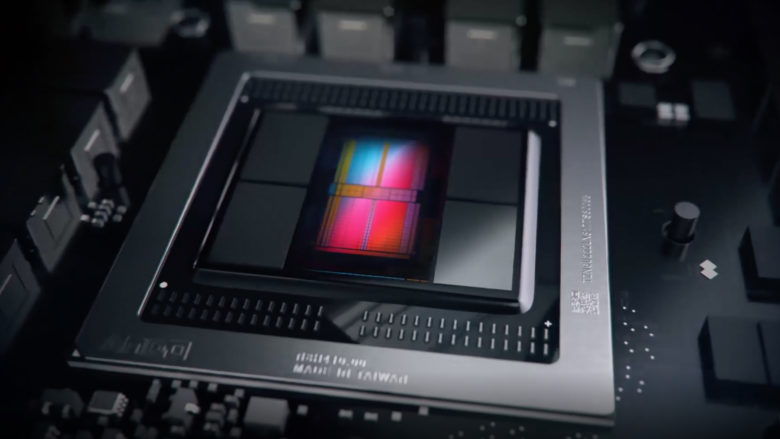

On the AMD side, the R7 is built on a 7nm process and has a TDP of 300W. To put it bluntly, it took AMD two years to catch up to what Nvidia was doing on an older and theoretically less power-efficient node.

Viewed another way, AMD had to move to 7nm simply to match the performance Nvidia was achieving on 16nm, yet two years later AMD is still less power-efficient.
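The efficiency gap can be made concrete with some back-of-the-envelope arithmetic. This is a rough sketch, not a measurement: it assumes all three cards deliver the same gaming performance (the "broadly similar" conclusion of the performance roundups) and uses rated TDP as a stand-in for actual power draw, which real-world measurements will not match exactly. The names `RATED_TDP_W`, `perf_per_watt`, and `efficiency_vs` are purely illustrative.

```python
# Back-of-the-envelope performance-per-watt comparison.
# Assumptions (not measurements): all three cards deliver the same
# gaming performance, and rated TDP stands in for real power draw.

RATED_TDP_W = {
    "RTX 2080 FE": 225,  # 12nm
    "GTX 1080 Ti": 250,  # 16nm
    "Radeon VII": 300,   # 7nm
}

RELATIVE_PERF = 1.0  # hypothetical: identical performance for every card


def perf_per_watt(tdp_w: float, perf: float = RELATIVE_PERF) -> float:
    """Relative performance delivered per watt of rated TDP."""
    return perf / tdp_w


def efficiency_vs(card: str, baseline: str) -> float:
    """How many times more perf/W `card` delivers than `baseline`."""
    return perf_per_watt(RATED_TDP_W[card]) / perf_per_watt(RATED_TDP_W[baseline])


if __name__ == "__main__":
    for card in RATED_TDP_W:
        ratio = efficiency_vs(card, "Radeon VII")
        print(f"{card}: {ratio:.2f}x the Radeon VII's perf/W")
```

Under these assumptions, the 2080 delivers roughly 1.33x, and the 1080 Ti roughly 1.20x, the R7's performance per watt, despite both sitting on older nodes.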

On top of all this, unlike its equally priced competition, the R7 has no hardware-accelerated ray tracing or machine learning. The fact that the 2080 offers these features at the same price automatically gives it better value. It's a simple calculus: more features for the same price equals more value.

Some may justify wanting an R7 by saying they don't care about ray tracing. If that's the case, then it shouldn't matter whether you buy the 2080, the R7, or the 1080 Ti. They all have broadly equal performance, so you should be fine buying any of them. Saying you don't care about ray tracing simply isn't a valid justification for buying the R7 specifically.

A better and more accurate argument is that you are an AMD customer who wants an upgrade and wishes to stay within AMD's ecosystem. Honestly, this is the only reason I can think of for a gamer to want an R7.

But this circles back to the point above. AMD had to move to a 7nm node simply to keep pace with Nvidia's last-gen flagship, the 1080 Ti. Remember, the 2080 is not the flagship of Nvidia's current lineup. That would be the 2080 Ti.

So the looming question remains: What the hell is going to happen once Nvidia moves to 7nm?

With Turing, Nvidia ceded some die space to include genuinely next-generation hardware that enables next-generation rendering techniques, and despite this, it is still able to easily match the rasterization performance of AMD's R7.

Imagine if, instead of including truly next-gen ray tracing and machine learning hardware, Nvidia had chosen to simply pack more CUDA cores onto its Turing die. If the 2080 is able to match the R7 with its die space split among three types of cores (CUDA, RT, and Tensor), imagine how much faster than the R7 it would have been with that space devoted to CUDA cores. Given the inherent efficiencies and optimizations afforded by Turing, it's reasonable to say that a theoretical all-CUDA 2080 would have handily outperformed the R7.

Nvidia has managed to out-innovate, out-engineer, and out-architect AMD despite AMD moving to a theoretically far more efficient node.

This is what frustrates me so much about AMD. It says it's working on ray tracing, but its self-labeled competition has it right now. And it's not a fairy tale. Both Battlefield V and Metro Exodus include this technology. And from my experience, it's amazing. Metro Exodus is a true inflection point in our industry, and it's because of this technology.

When it comes time for Nvidia's next architecture, it will have 18 to 24 months' worth of data and lessons to draw from to further optimize its designs for ray tracing and machine learning. AMD won't have that, potentially placing it even further behind.

It just seems no matter what AMD does, they are always behind. And I feel like this time, they’re behind in a big way.

So yeah, cool, AMD finally released a high-end card. But I fundamentally believe it's just not enough. It is still an objectively lesser product than its own self-labeled direct competitor, precisely because Nvidia's card costs the same yet adds the objective value of hardware-accelerated ray tracing and machine learning.

Crucially, the R7 is still no match for Nvidia's flagship 2080 Ti. And against this backdrop is the cold, hard fact that Nvidia has yet to move to 7nm. Once it does, I worry AMD's current engineering, architectural, and innovation deficit will be greatly exacerbated. No matter how you look at it, it's not good. I certainly hope AMD proves me wrong.